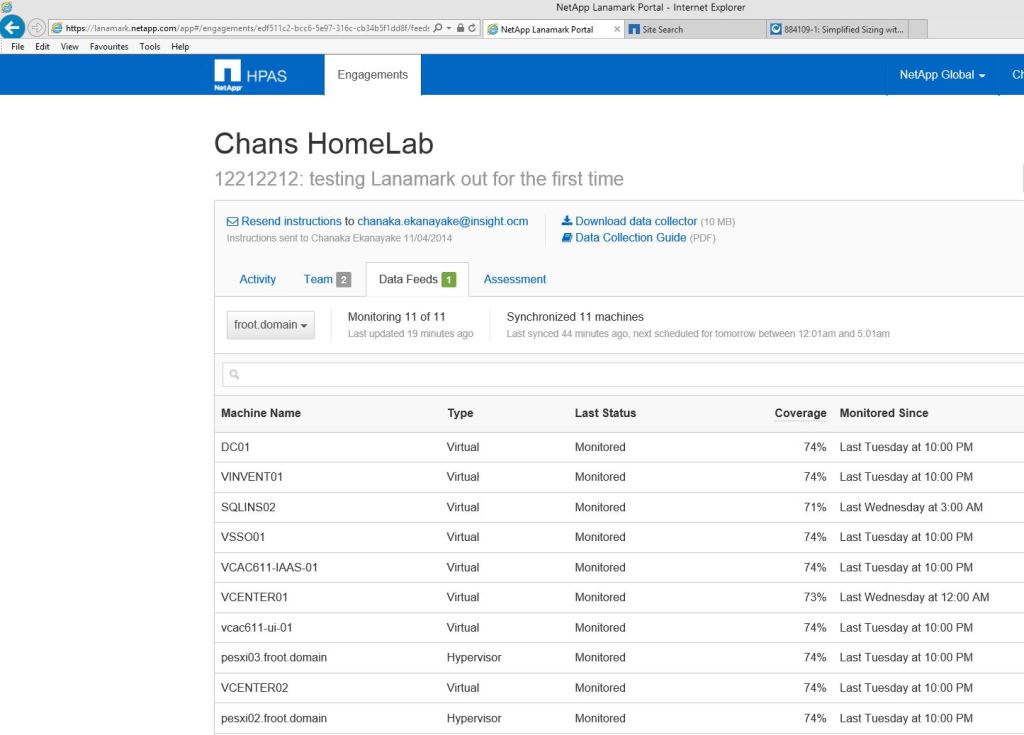

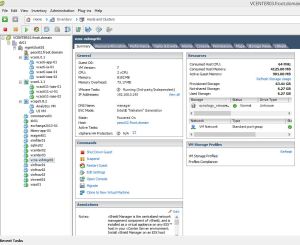

I have a VMware home lab with 3 ESXi whiteboxes, which is kind of the lifeblood of most of my VMware-related studying and new product deployment experience. As I’m in a presales role, I don’t often get to deploy every single product I talk about in an enterprise IT environment (I do limited deployment work every now and then), so having a decent lab where I can simulate an enterprise IT infrastructure and deploy any VMware product (or any other part of the SDDC for that matter) is absolutely essential for me to function successfully in my job. Because of this necessity, I maintain my own lab in my little garage at home, and I currently have 3 ESXi servers as follows:

- Dedicated Management cluster – 1 ESXi whitebox
  - 1 x Intel Xeon E3-1230 4C CPU @ 3.20GHz with HT (8 threads), 32 GB RAM, SuperMicro X8SIL motherboard, 1 x Dual port Intel 1000 Mbps NIC card
- A Compute cluster – 2 ESXi whiteboxes
  - 1 x Intel Xeon X3450 4C CPU @ 2.67GHz with HT (8 threads), 32 GB RAM, Gigabyte Z68AP-D3 motherboard, 1 x Dual port Intel 1000 Mbps LOM
  - 1 x Intel Core i7 950 4C CPU @ 3.10 GHz with HT (8 threads), 32 GB RAM, Gigabyte motherboard, 1 x Dual port Intel NIC card

I also have a Synology 412+ as a shared SAN (iSCSI & NFS, and also on VMware’s HCL) for storage, and an L3-enabled Cisco 3560G Gigabit switch for networking.
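To make the current capacity easier to see at a glance, here is a minimal sketch that totals threads and RAM per cluster from the host list above. The `Host` class and `cluster_totals` function are hypothetical names I made up for illustration; the core counts and RAM sizes are the ones listed above, and I’m assuming 2 threads per core since all three CPUs have HT.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    """One ESXi whitebox (hypothetical helper type, for illustration only)."""
    cluster: str
    cpu: str
    cores: int
    threads_per_core: int  # 2 when Hyper-Threading is enabled
    ram_gb: int

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core


# Figures taken from the hardware list above.
HOSTS = [
    Host("management", "Xeon E3-1230", 4, 2, 32),
    Host("compute", "Xeon X3450", 4, 2, 32),
    Host("compute", "Core i7 950", 4, 2, 32),
]


def cluster_totals(hosts):
    """Sum host count, logical threads and RAM for each cluster."""
    totals = {}
    for host in hosts:
        entry = totals.setdefault(
            host.cluster, {"hosts": 0, "threads": 0, "ram_gb": 0}
        )
        entry["hosts"] += 1
        entry["threads"] += host.threads
        entry["ram_gb"] += host.ram_gb
    return totals


if __name__ == "__main__":
    for name, t in cluster_totals(HOSTS).items():
        print(f"{name}: {t['hosts']} host(s), {t['threads']} threads, {t['ram_gb']} GB RAM")
```

Run as a script, this shows the management cluster at 8 threads and 32 GB RAM against 16 threads and 64 GB RAM for the compute cluster.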

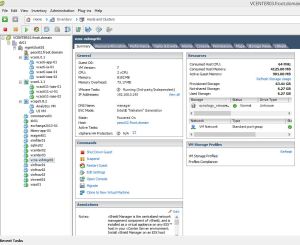

As you can see, my management cluster is jam-packed with VMs, some of which have large resource assignments (e.g. the vCops VMs are 8GB each). There’s heavy ballooning happening already, and that is even after I’ve had to shut some VMs down temporarily (I don’t like to keep key VMs powered down; I’d rather have them running 24×7 to simulate real production behaviour).

When I deploy new VMware products, I usually deploy them in a distributed architecture rather than the default all-in-one server kinda way, to simulate a scalable enterprise deployment, and this obviously sucks up a lot of resources, mainly memory, in my poor 1-server management cluster. Add on top of that things like VMware vCAC and NSX demanding dedicated management clusters, and it’s obvious that I need to increase the resources within this cluster ASAP.

So, time to add a new ESXi host to the management cluster….

I’ve been looking around a lot and researching the options I have available. I considered buying an OEM server (HP and Dell have a few SMB servers at reasonable prices), but what’s obvious is that while the base server at minimum spec seems cheap enough, the moment you want to add some memory to make it useful, the cost jumps up massively (nice try HP & Dell…!!)

So, similar to the existing boxes, I’ve decided to build another whitebox…. Having done some research, I’ve come up with the following key components as the best fit for a reasonable cost. I will aim to justify my choice for each item.

- Intel Xeon E5-2620 V2 (Ivy Bridge EP) – Cost around £300
  - Justification: The most important thing this gives me is the thread count. It’s a 6C CPU with HT, giving me a total of 12 threads, which is great for running more VMs, and it’s not badly priced compared to the other options available. I did look at the Intel Core i7s again (which are also on the VMware HCL), but none of the recent models comes with more than 4 cores, which potentially limits my VM density. A Core i7 Extreme would have been an option, but the price and the age ruled that out. The Xeon E3s were also limited to 4 cores and the E7s were astronomically expensive for a home lab, so no go there. The Xeon E5 seemed to be the best option available, and the E5 version 2 processors seem to strike the best balance between core count, core speed and cost. The one I’ve chosen, the E5-2620 V2, has the best mix and a very low price. If you are concerned about power usage, it has one of the lowest TDP (Thermal Design Power) ratings at 80W.
- MSI X79A-GD65 (8D) Motherboard – Cost around £160
  - This was a key part of the system. I settled for a single-socket motherboard, but the most important requirement was memory scalability up to 64GB (which this board has thanks to its 8 DIMM slots). The Intel X79 chipset’s built-in RAID (AHCI) is also great as it enables vSAN support (ESXi 5.5 U2 has AHCI drivers), so I can test that out too.
- 64GB RAM (non ECC) – Cost around £340
  - This was just easy. I needed as much RAM as I could get for a reasonable cost, and non-ECC was obviously cheaper. I would have preferred to get 128GB of RAM, but I felt the cost was a little too high.
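As a rough sanity check on the numbers above, here is a minimal sketch that adds up the three key components and works out what the management cluster would look like with the new host added. The function names are hypothetical, the prices are the approximate figures quoted above (not exact quotes), and the existing management host’s 8 threads and 32 GB RAM come from the lab description earlier in the post. Note that this only covers the three key parts, not the full build.

```python
# Approximate prices in GBP, as quoted in the list above.
KEY_PARTS_GBP = {
    "Intel Xeon E5-2620 V2": 300,
    "MSI X79A-GD65 (8D)": 160,
    "64GB RAM (non ECC)": 340,
}


def key_parts_cost(parts):
    """Total cost of the listed key components only (not the full build)."""
    return sum(parts.values())


def logical_threads(cores, hyper_threading=True):
    """Logical CPU threads: 2 per core with HT, 1 per core without."""
    return cores * 2 if hyper_threading else cores


def cluster_after_adding(existing_threads, existing_ram_gb, new_threads, new_ram_gb):
    """Return (threads, ram_gb) for the cluster once the new host joins."""
    return existing_threads + new_threads, existing_ram_gb + new_ram_gb


if __name__ == "__main__":
    new_threads = logical_threads(6)  # E5-2620 V2: 6 cores with HT
    threads, ram_gb = cluster_after_adding(8, 32, new_threads, 64)
    print(f"Key parts: about £{key_parts_cost(KEY_PARTS_GBP)}")
    print(f"Management cluster after upgrade: {threads} threads, {ram_gb} GB RAM")
```

So for roughly £800 in key parts, the management cluster would go from 8 threads and 32 GB RAM to 20 threads and 96 GB RAM.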

I initially spent quite a lot of time checking the inter-compatibility of the components the hard way, by reading the documentation for each component, before accidentally coming across the site http://pcpartpicker.com/. This site is brilliant in that you can start off with, say, the CPU and it will automatically show you which other components are compatible with it, so you can select from a pre-vetted list of compatible components. Once you select an item, it also shows you prices from multiple online sources that you can jump to directly if you want to order. (I did search for pricing outside of the listings given by the site to double check, and most items were cheaper through the sources the site suggested.)

If you want to see the complete build of my whitebox, see the link below; the site lets you publish the full config and the approximate cost based on the cheapest prices available online (which were pretty accurate). All for under £1000, which wasn’t too bad in my case.

http://uk.pcpartpicker.com/user/chanakaek/saved/qbgZxr

I think this site is awesome, and hopefully it will help you build your own whitebox quite easily if you need one. There are US, UK, Australian and a few other country versions of the site, so it uses local suppliers for the cost calculations.

Note: I bought my case from elsewhere though, as I needed a rack-mount server case and I’d bought the same one before for my existing servers. That can be found here.

Hopefully this was useful if you are planning to build your own ESXi whitebox.

Also, have a look at Frank Denneman’s blog about his home lab here. I think the motherboard he’s used is pretty awesome, with built-in 10GbE ports and memory scalability up to 128GB.

Cheers

Chan