I attended the vSphere Troubleshooting Workshop (5.5) this week at EMC Brentford (delivered by VMware Education) and found the course a good refresher on some key vSphere troubleshooting commands and tools that I have often used when troubleshooting issues. And since they say sharing is caring (with the ulterior motive of documenting it all in one place for my own future reference too), I thought I would summarise the key commands and tools covered in the course, with some additional information, all in one place for ease of reference.

First of all, a brief intro to the course.

The vSphere Troubleshooting course is not really a course per se, but more of a workshop which consists of two parts.

- 30% theory content: mostly quick reminders of each major component that makes up vSphere, its architecture, and what can go wrong in its configuration and operational life.

- 70% actual troubleshooting of vSphere issues: a large number of lab-based exercises where you have to troubleshoot deliberately created issues that simulate real-life configuration problems (note that performance issues are NOT part of this course). Before each lab, you run a pre-configured PowerCLI script (provided by VMware) which deliberately breaks or misconfigures something in a functioning vSphere environment, and it is then your job to work out the root cause and fix it. Another PowerCLI script, run at the end, verifies that you’ve addressed the correct root cause and fixed it properly (as VMware intended).

A little more about the troubleshooting itself first. During the lab exercises, you are encouraged to use any method necessary to fix the given issues: command line, GUI (Web Client), VMware KB articles and so on. I found the best experience was to stick to the command line where possible, which turned out to be a very good refresher on the various command line tools and logs available within VMware vSphere that I don’t get to use often in my normal day-to-day life.

I attended this course primarily because it’s supposed to help with preparation for the VMware VCAP-DCA certification I’m planning to take soon. If you are planning the same, then unless you are in a dedicated 2nd line or 3rd line VMware support role where you are bound to know most of the commands by heart, I’d encourage you to attend this course too. It won’t give you very many silver bullets when it comes to ordinary troubleshooting, but it makes you work over and over again with command line tools and logs you previously would have used very occasionally at best. (For example, I learnt a lot about the various uses of the esxcli command, which was really handy. Before the course, I was aware of esxcli and had used it a few times for a couple of tasks, but had never looked at the whole hierarchy and how it applies to troubleshooting and fixing various vSphere issues.)

It may also be important to mention that there’s a dedicated lab on setting up SSL Certificates for communication between all key vSphere components (a very tedious task by the way) which some may find quite useful.

So, the aim of this post is to summarise some key commands covered within the course in an easy-to-read hierarchical format, which you can use for troubleshooting VMware vSphere configuration issues, all in one place. (If you are an expert in vSphere troubleshooting, I’d advise taking a rain check on the rest of this post.)

The commands below can be run in the ESXi Shell, vCLI, an SSH session or within the vMA (vSphere Management Assistant; I highly recommend that you deploy this and integrate it with your Active Directory).


Generic Commands Available

- vSphere Management Assistant appliance – Recommended, safest way to execute commands

- vCLI commands

- esxcli-* commands

- Primary set of commands to be used for most ESXi host based operations

- VMware online reference

- esxcli device

- Lists descriptions of device commands.

- esxcli esxcli

- Lists descriptions of esxcli commands.

- esxcli fcoe

- FCOE (Fibre Channel over Ethernet) commands

- esxcli graphics

- esxcli hardware

- Hardware namespace. Used primarily for extracting information about the current system setup.

- esxcli iscsi

- iSCSI namespace for monitoring and managing hardware and software iSCSI.

- esxcli network

- Network namespace for managing virtual networking including virtual switches and VMkernel network interfaces.

- esxcli sched

- Manage the shared system-wide swap space.

- esxcli software

- Software namespace. Includes commands for managing and installing image profiles and VIBs.

- esxcli storage

- Includes core storage commands and other storage management commands.

- esxcli system

- System monitoring and management command.

- esxcli vm

- Namespace for listing virtual machines and shutting them down forcefully.

- esxcli vsan

- Namespace for VSAN management commands. See the vSphere Storage publication for details.

- vicfg-* commands

- Primarily used for managing Storage, Network and Host configuration

- Can be run against ESXi systems or against a vCenter Server system.

- If the ESXi system is in lockdown mode, run commands against the vCenter Server

- Replaces most of the esxcfg-* commands. A direct comparison can be found here

- VMware online reference

- vicfg-advcfg

- Performs advanced configuration including enabling and disabling CIM providers. Use this command as instructed by VMware.

- vicfg-authconfig

- Manages Active Directory authentication.

- vicfg-cfgbackup

- Backs up the configuration data of an ESXi system and restores previously saved configuration data.

- vicfg-dns

- Specifies an ESX/ESXi host’s DNS configuration.

- vicfg-dumppart

- Manages diagnostic partitions.

- vicfg-hostops

- Allows you to start, stop, and examine ESX/ESXi hosts and to instruct them to enter maintenance mode and exit from maintenance mode.

- vicfg-ipsec

- vicfg-iscsi

- vicfg-module

- Enables VMkernel options. Use this command with the options listed, or as instructed by VMware.

- vicfg-mpath

- Displays information about storage array paths and allows you to change a path’s state.

- vicfg-mpath35

- Configures multipath settings for Fibre Channel or iSCSI LUNs.

- vicfg-nas

- Manages NAS file systems.

- vicfg-nics

- Manages the ESX/ESXi host’s NICs (uplink adapters).

- vicfg-ntp

- Specifies the NTP (Network Time Protocol) server.

- vicfg-rescan

- Rescans the storage configuration.

- vicfg-route

- Lists or changes the ESX/ESXi host’s route entry (IP gateway).

- vicfg-scsidevs

- vicfg-snmp

- Manages the Simple Network Management Protocol (SNMP) agent.

- vicfg-syslog

- Specifies the syslog server and the port to connect to that server for ESXi hosts.

- vicfg-user

- Creates, modifies, deletes, and lists local direct access users and groups of users.

- vicfg-vmknic

- Adds, deletes, and modifies virtual network adapters (VMkernel NICs).

- vicfg-volume

- Supports resignaturing a VMFS snapshot volume and mounting and unmounting the snapshot volume.

- vicfg-vswitch

- Adds or removes virtual switches or vNetwork Distributed Switches, or modifies switch settings.

- vmware-cmd commands

- Commands implemented in Perl that do not have a vicfg- prefix.

- Performs virtual machine operations remotely including creating a snapshot, powering the virtual machine on or off, and getting information about the virtual machine.

- VMware online reference

- vmware-cmd <path to the .vmx file> <VM operations>

- vmkfstools command

- Creates and manipulates virtual disks, file systems, logical volumes, and physical storage devices on ESXi hosts.

- VMware online reference

- ESX shell / SSH

- esxcli-* commands

- Primary set of commands to be used for most ESXi host based operations

- VMware online reference

- esxcli device

- Lists descriptions of device commands.

- esxcli esxcli

- Lists descriptions of esxcli commands.

- esxcli fcoe

- FCOE (Fibre Channel over Ethernet) commands

- esxcli graphics

- esxcli hardware

- Hardware namespace. Used primarily for extracting information about the current system setup.

- esxcli iscsi

- iSCSI namespace for monitoring and managing hardware and software iSCSI.

- esxcli network

- Network namespace for managing virtual networking including virtual switches and VMkernel network interfaces.

- esxcli sched

- Manage the shared system-wide swap space.

- esxcli software

- Software namespace. Includes commands for managing and installing image profiles and VIBs.

- esxcli storage

- Includes core storage commands and other storage management commands.

- esxcli system

- System monitoring and management command.

- esxcli vm

- Namespace for listing virtual machines and shutting them down forcefully.

- esxcli vsan

- Namespace for VSAN management commands. See the vSphere Storage publication for details.

- esxcfg-* commands (deprecated but still work on ESXi 5.5)

- vmkfstools command

- Creates and manipulates virtual disks, file systems, logical volumes, and physical storage devices on ESXi hosts.

- VMware online reference
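To give a feel for how the esxcli namespaces above map to real commands, here is a minimal sketch of some read-only queries I find handy as a first look at a host. It assumes you are in the ESXi Shell or an SSH session on an ESXi 5.x host; the function name `host_overview` is just something I made up for illustration.

```shell
#!/bin/sh
# Sketch only: a handful of read-only esxcli queries, roughly one per namespace.
# host_overview is a made-up helper name, not a VMware tool.

host_overview() {
    esxcli system version get              # ESXi version and build number
    esxcli hardware cpu global get         # CPU packages, cores and threads
    esxcli network nic list                # physical uplinks (vmnicX), link state, MAC
    esxcli network vswitch standard list   # standard vSwitches and their port groups
    esxcli network ip interface list       # VMkernel interfaces (vmkX)
    esxcli storage core adapter list       # storage adapters (vmhbaX)
    esxcli storage filesystem list         # mounted VMFS / NFS datastores
    esxcli software vib list               # installed VIBs
    esxcli vm process list                 # running VMs and their World IDs
}

# Only run the queries when esxcli is actually present (i.e. on an ESXi host).
if command -v esxcli >/dev/null 2>&1; then
    host_overview
else
    echo "esxcli not found: run this on an ESXi host" >&2
fi
```

From vCLI or the vMA the same namespaces are available remotely; you would normally add connection options such as `--server <host>` and `--username <user>` to each esxcli call.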


Log File Locations

- vCenter Log Files

- Windows version

- C:\Documents and Settings\All Users\Application Data\VMware\VMware VirtualCenter\Logs

- C:\ProgramData\VMware\VMware VirtualCenter\Logs

- Appliance version

- VMware KB for SSO log files

- ESXi Server Logs

- /var/log (location of most ESXi log files)

- /etc/vmware/vpxa/vpxa.cfg (vpxa/vCenter agent configuration file)

- VMware KB for all ESXi log file locations

- /etc/opt/vmware/fdm (FDM agent files for HA configuration)

- Virtual Machine Logs

- /vmfs/volumes/<directory name>/<VM name>/vmware.log (Virtual machine log file)

- /vmfs/volumes/<directory name>/<VM name>/<*.vmdk files> (Virtual machine disk descriptor files, with references to the CID numbers of the disk itself and of its parent vmdk files if snapshots exist)

- /vmfs/volumes/<directory name>/<VM name>/<*.vmx files> (Virtual machine configuration settings, including pointers to vmdk files etc.)
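Knowing where the logs live is half the job; the other half is searching them quickly. Below is a rough sketch of how I would grep the main ESXi logs for a keyword in one go. The function name `search_esxi_logs` and the choice of log files are my own assumptions (these are the usual ESXi 5.x file names under /var/log), so adjust to taste.

```shell
#!/bin/sh
# Sketch only: search the main ESXi logs for a keyword.
# search_esxi_logs is a made-up helper; the file names are the usual
# ESXi 5.x defaults under /var/log.

search_esxi_logs() {
    pattern="$1"
    logdir="${2:-/var/log}"    # optional: point at an extracted log bundle instead
    for log in vmkernel.log hostd.log vpxa.log fdm.log; do
        [ -f "$logdir/$log" ] || continue      # skip logs that do not exist
        echo "== $log"
        grep -i "$pattern" "$logdir/$log" | tail -n 20   # last 20 matches only
    done
}

# Example: search_esxi_logs "lost access"
```

For a VM-level problem, the same grep approach works against the vmware.log file in the VM’s own folder on the datastore.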


Networking commands (used to identify and fix network configuration issues)

- Basic network troubleshooting commands

- ping

- Good old ping.

- Need I say any more?

- vmkping

- Ping from VMkernel interfaces.

- Useful for IP storage networking troubleshooting

- Can ping from a specific VMkernel interface using “vmkping -I <VMkernel interface name such as vmk0, vmk1….> <host IP>”

- VMware Online reference API for the command

- esxcli network * commands

- vicfg-* commands (network related)

- Physical Hardware Troubleshooting

- Traffic capture commands

- tcpdump-uw

- Works with all versions of ESXi

- Refer to VMware KB for additional information

- pktcap-uw

- Available from ESXi 5.5 onwards

- Refer to VMware KB for additional information

- Telnet equivalent

- nc command (netcat)

- Used to verify that you can reach a certain port on a destination host (similar to telnet)

- Run in the ESXi Shell or over SSH

- Example: nc -z <IP address of iSCSI server> 3260 (checks whether the iSCSI port can be reached from the ESXi host)

- VMware KB article

- Network performance related commands

- esxtop (ESXi Shell or SSH) & resxtop (vCLI) – ‘n’ for networking
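Putting vmkping and nc together, this is roughly the sequence I would run when an ESXi host cannot see its IP storage. Treat it as a sketch: `check_ip_storage` is a made-up helper name, and the IP address, vmk interface and port in the example are placeholders rather than anything from the course labs.

```shell
#!/bin/sh
# Sketch only: reachability checks from an ESXi host towards an IP storage target.
# Run in the ESXi Shell or over SSH. check_ip_storage is a made-up helper name.

check_ip_storage() {
    target="$1"          # e.g. the iSCSI or NFS server IP
    vmk="$2"             # VMkernel interface to test from, e.g. vmk1
    port="${3:-3260}"    # TCP port to probe, iSCSI by default

    # ICMP test out of the chosen VMkernel interface
    vmkping -I "$vmk" -c 3 "$target" || echo "no ping reply via $vmk"

    # TCP port test (the telnet replacement)
    if nc -z "$target" "$port"; then
        echo "port $port open"
    else
        echo "port $port unreachable"
    fi
}

# Example call (made-up address):
#   check_ip_storage 192.168.10.20 vmk1
#
# If the checks fail, capture traffic on the same interface while repeating them:
#   tcpdump-uw -i vmk1 -n host 192.168.10.20
#   pktcap-uw --vmk vmk1 -o /tmp/vmk1.pcap      # ESXi 5.5
```

The capture file written by pktcap-uw can then be copied off the host and opened in a packet analyser.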


Storage Commands (used to identify & fix various storage issues)

- Basic storage commands

- VMFS metadata inconsistencies

- voma command (vSphere On-disk Metadata Analyzer)

- Example: voma -m vmfs -f check -d /vmfs/devices/disks/naa.xxxx:y (where y is the partition number)

- Refer to VMware KB article for additional information

- disk space utilisation

- Storage performance related commands

- esxtop (ESXi Shell or SSH) & resxtop (vCLI) – ‘d’ for disk adapters, ‘u’ for disk devices, ‘v’ for per-VM disk statistics
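For storage, a typical first pass looks something like the sketch below: rescan, see which devices and paths the host has, map datastores to their backing devices, and only then reach for voma. The helper names `storage_overview` and `vmfs_check` are mine, and the device name and partition number passed to `vmfs_check` are whatever applies to your datastore.

```shell
#!/bin/sh
# Sketch only: first-pass storage checks on an ESXi 5.x host.
# storage_overview and vmfs_check are made-up helper names.

storage_overview() {
    esxcli storage core adapter rescan --all   # rescan all HBAs for new devices
    esxcli storage core device list            # devices (naa.*) the host can see
    esxcli storage core path list              # paths to each device and their state
    esxcli storage vmfs extent list            # datastore -> device name and partition
    df -h                                      # datastore space utilisation
}

# Metadata check of one VMFS partition, same form as the voma example above.
# $1 = device name (e.g. naa.xxxx), $2 = partition number.
vmfs_check() {
    voma -m vmfs -f check -d "/vmfs/devices/disks/$1:$2"
}

# Example (placeholder device name, partition 1):
#   storage_overview
#   vmfs_check naa.xxxx 1
```

Do read the VMware KB article on voma before running it, as there are prerequisites for the datastore being checked.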


vCenter server commands (used to identify & fix vCenter, SSO, Inventory related issues)

- Note that most of the commands used to troubleshoot these issues are standard Windows commands, which I won’t cover here. Only a few key VMware vSphere specific commands are listed below.

- SSO

- ssocli command (C:\Program Files\VMware\Infrastructure\SSOServer\utils\ssocli)

- vCenter

- vpxd.exe command (C:\Program Files\VMware\Infrastructure\VirtualCenter Server\vpxd.exe)

Virtual Machine related commands (used to identify & fix VM related issues)

- Generic VM commands

- vmware-cmd commands (vCLI only)

- vmkfstools command

- File locking issues

- touch command

- vmkfstools -D command

- Example: vmkfstools -D /vmfs/volumes/<directory name>/<VM name>/<VM Name.vmdk> (shows the MAC address of the ESXi server holding the file lock. If the file is locked by the same ESXi server where the command was run, ‘000000000000’ is shown)

- lsof command (identifies the process locking the file)

- Example: lsof | grep <name of the locked file>

- kill command (kills the process)

- md5sum command (used to calculate file checksums)
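The file locking steps above can be strung together as in the sketch below. It assumes the ESXi 5.x output format of vmkfstools -D, where the lock line contains the word "owner" followed by a UUID whose last 12 hex digits are the MAC address of the host holding the lock. Both helper names (`lock_owner`, `owner_to_mac`) are made up, and the UUID in the comment is purely illustrative.

```shell
#!/bin/sh
# Sketch only: find out which host holds the lock on a VM file.
# Assumes the lock line printed by "vmkfstools -D" contains "owner <uuid>".

# Print the owner UUID from the lock line of the given file.
lock_owner() {
    vmkfstools -D "$1" 2>&1 | sed -n 's/.*owner \([0-9a-f-]*\).*/\1/p' | head -n 1
}

# Turn the last segment of an owner UUID into a colon-separated MAC address.
# e.g. an illustrative owner of 4655cd8b-3c4a19f2-17bc-00145e808070
owner_to_mac() {
    echo "$1" | sed 's/.*-//' | sed 's/\(..\)/\1:/g; s/:$//'
}

# Example (placeholder path):
#   owner=$(lock_owner "/vmfs/volumes/<directory name>/<VM name>/<VM Name.vmdk>")
#   owner_to_mac "$owner"
#
# On the host that owns the lock, find and stop the process holding the file:
#   lsof | grep <name of the locked file>
#   kill <PID from the lsof output>
```

To match the MAC address to a host, compare it with the output of `esxcli network nic list` on each ESXi server. An all-zero result means the lock is held by the host you ran the command on.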

Please note that neither this post nor the vSphere Troubleshooting course covers every single command available for troubleshooting the different vSphere components; they cover only a key subset of the commands that are usually required 90% of the time. Hopefully having them all in one place in this post will be handy when you need to look them up. I’ve provided direct links to the VMware online documentation for each command above so you can delve further into each one.

Good luck with your troubleshooting work..!!

Command line rules….!!

Cheers

Chan

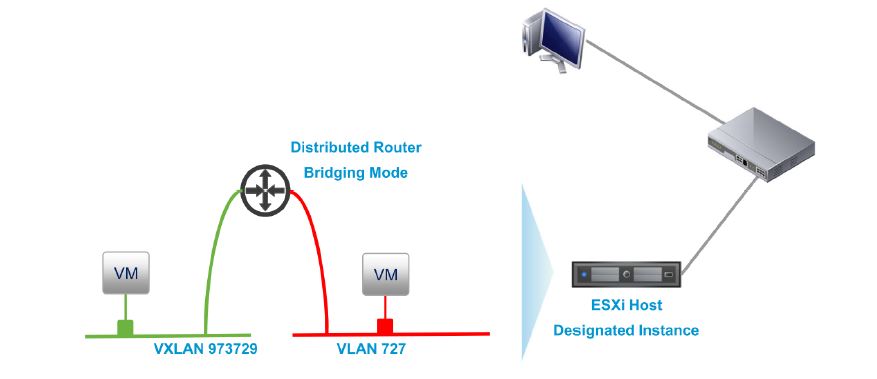
