A short post today on something slightly less technical but equally important. This is not a marketing piece, just my thoughts on something I came across that I thought was worth writing about.

Background

I came across an interesting article this morning, based on Gartner research into last year’s global IT spend, which revealed that global IT spend was down by about $216 billion during 2015. However, during the same year data center IT spend was up by 1.8%, and that growth is forecast to rise to 3% in 2016. Everyone from IT vendors to resellers to every IT sales person you come across these days, whether on Internet blogs / news / LinkedIn or out in the field, seems to believe (and wants you to believe) that the customer owned data center is dead for good and everything is, or should be, moving to the cloud (Public cloud, that is). If all that is true, it made me wonder how data center spend went up when it should have gone down. One might think this data center spend was itself fuelled by the expansion of public cloud infrastructure, driven by increased demand for Public cloud platforms like Microsoft Azure and Amazon AWS. Makes total sense, right? Perhaps at first glance. But upon closer inspection, there’s a slightly more complicated story, the way I see it.

Part 1 – Contribution from the Public cloud

Public cloud platforms like AWS are growing fast and aggressively, and there’s no denying that. They address a real need in the industry: a global, shared platform that can scale on demand with practically no upper limit. Thanks to the sheer economy of scale these shared platform providers have, customers benefit from cheaper IT costs. Compare that with having to spec up a data center for your occasional peak requirements (which may only be hit once a month) and pay for it all upfront regardless of the actual utilisation, which can be an expensive exercise for many. With a Public cloud platform, the upfront cost is lower and you pay per usage, which makes it an attractive platform for many. Sure, there are more benefits to using a public cloud platform than just the cost factor, but “the cost” has been the key underlying driver for enterprises adopting public cloud since its inception.

Most new start-ups (the Netflixes of the world) and even some established enterprise customers who don’t have the baggage of legacy apps are by default electing to predominantly use a cheaper Public cloud platform like AWS to host their business application stack, without owning their own data center kit. (By legacy apps, I’m referring to client-server type applications typically run on the Microsoft Windows platform.) This will continue to be the case for those customers and will therefore continue to drive the expansion of Public cloud platforms like AWS. And I’m sure a significant portion of the growth in data center spend in 2015 came from this increase in pure Public cloud usage, which causes the cloud providers to buy yet more data center hardware.
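To put some rough arithmetic behind the “spec for the peak vs pay per usage” point, here is a minimal Python sketch. Every number in it is a made-up assumption for illustration (the demand profile, the owned cost of 300 per server per month and the cloud price of 1 per server-hour), and the function names are my own, not from any vendor’s pricing model.

```python
def peak_sized_utilisation(hourly_demand):
    """Average utilisation of kit that was bought to cover the busiest hour."""
    peak = max(hourly_demand)
    return sum(hourly_demand) / (peak * len(hourly_demand))


def monthly_costs(hourly_demand, owned_cost_per_server_month, cloud_price_per_server_hour):
    """Return (owned, pay_per_use) cost for one month of demand."""
    # Owned kit is sized (and paid for) at the peak, whatever the actual usage.
    owned = max(hourly_demand) * owned_cost_per_server_month
    # Pay per use only charges for the server-hours actually consumed.
    pay_per_use = sum(hourly_demand) * cloud_price_per_server_hour
    return owned, pay_per_use


# Hypothetical 30-day month (720 hours): 10 servers normally,
# 50 servers during a single 24-hour peak.
demand = [10] * 696 + [50] * 24

utilisation = peak_sized_utilisation(demand)
owned, pay_per_use = monthly_costs(demand, owned_cost_per_server_month=300,
                                   cloud_price_per_server_hour=1)

print(f"utilisation of peak-sized kit: {utilisation:.0%}")
print(f"owned: {owned}, pay per use: {pay_per_use}")
```

With these invented figures the peak-sized kit sits at roughly 23% utilisation, and the pay per use bill (8160) comes in well under the owned one (15000), even though the assumed hourly cloud price is more than double the owned cost per server-hour. Real pricing is far messier, so treat this purely as an illustration of the shape of the argument.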

Part 2 – Contribution from the “Other” cloud

The point, however, is that not all of the data center spend increase in 2015 would have come from Public cloud platforms like AWS or Azure buying extra kit for their data centers. When you look at numbers from traditional hardware vendors, HP’s numbers appear to be up by around 25% for the year, and others such as Dell, Cisco and EMC also appear to have grown their sales in 2015, which appears to have contributed to this increased data center spend. It is no secret that these public cloud platforms do not use traditional data center hardware vendors’ kit in their Public cloud data centers. They often use commodity hardware or even build servers and networking equipment themselves (a lot cheaper). So where would the increased sales for these vendors have come from?

My guess is that they have most likely come from enterprise customers deploying Hybrid Cloud solutions. These involve the customer’s own hardware being deployed in their own / co-location / off-prem / hosted data centers (the customer still owns the kit), alongside an enterprise friendly Public cloud platform (mostly Microsoft Azure or VMware vCloud Air) acting as just another segment of their overall data center strategy.

If you consider most established enterprise customers, the chances are that they have lots of legacy applications that are not always cloud friendly. By legacy applications, I mean typical Wintel applications that conform to the client-server architecture. These apps would have started life in the enterprise back in the Windows NT / 2000 days and have grown with the business over time. They are typically not cloud friendly (the industry buzz word is “Cloud Native”), and moving them as is on to a Public cloud platform like AWS or Azure is often not commercially or technically feasible for most enterprises. (I’ve been working in the industry since the Windows 2000 days and I can assure you that these types of apps still make up a significant number out there.)

This “baggage” often prevents many enterprises from using purely Public cloud. (Sure, there are other things, like compliance, that get in the way of Public cloud too, but over time Public cloud systems will naturally begin to cater properly for compliance requirements etc., so these obstacles should be short lived.) While a small number of those enterprises will have the engineering budget and the resources necessary to re-design and re-develop these legacy app stacks into a more modern, cloud native stack, most of them will not have that luxury. Such redevelopment work is often expensive and, most importantly, time consuming and disruptive.

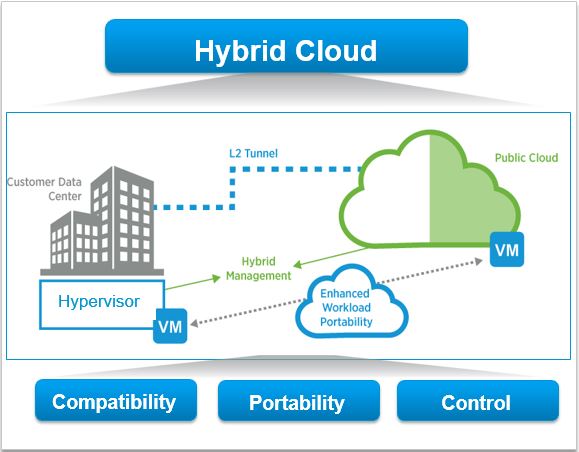

So, for most of these customers, the immediate tactical solution is to resort to a Hybrid cloud solution, where the legacy “baggage” app stack lives in a legacy data center and all newly developed apps are likely to be built as cloud native (designed and developed from the ground up) on an enterprise friendly Public cloud system such as Microsoft Azure or VMware vCloud Air. An overarching IT operations management platform (industry buzz word: “Cloud Management Platform”) then manages both the customer owned (private) portion and the Public portion of the Hybrid cloud solution seamlessly (with caveats, of course). I think this is what was happening in 2015, and it may also explain the growth in legacy hardware vendor sales at the same time. Since I work for a fairly large global reseller, I’ve witnessed these increased hardware sales from the traditional data center hardware vendor partners (HP, Cisco etc.) first hand through our own business too, which adds up. I believe this adoption of Hybrid cloud solutions will continue throughout 2016 and possibly beyond for a good while, at least until all legacy apps are eventually phased out, and that could be a long way off.

Summary

So there you have it. In my view, Public cloud will continue to grow, but it is unlikely to replace customer owned data center kit anytime soon. If anything, 2015 has shown that both Public cloud and Private cloud platforms (in the guise of Hybrid cloud) have grown together, and my thoughts are that this will continue to be the case for a good while. Who knows, I may well be proven wrong, and within 6 months the AWS, Azure and Google Public clouds will devour all private cloud platforms and everybody will be happy on just Public cloud :-). But common sense suggests otherwise. I can see a lot more Hybrid cloud deployments in the immediate future (at least the next few years), mainly using the Microsoft Azure and VMware vCloud Air platforms. Based on the technologies available today, these two stand out, in my view, as probably the best suited Public cloud platforms with strong Hybrid cloud compatibility, given their already popular presence in the enterprise data center (for hosting legacy apps efficiently) and the fact that each has a good overarching cloud management platform that customers can use to manage their Hybrid Cloud environments.

Thoughts and comments are welcome!